- Systemism - the mindless belief in systems, that they can be made to function to achieve desired goals. Systemantics - the strange behavior (antics) of complex systems

- Systems Never Do What We Really Want Them to Do

- Malfunction is the rule and flawless operation the exception. Cherish your system failures in order to best improve

- The height and depth of practical wisdom lies in the ability to recognize and not to fight against the Laws of Systems. The most effective approach to coping is to learn the basic laws of systems behavior. Problems are not the problem; coping is the problem

- Systems don’t enjoy being fiddled with and will react to protect themselves; the unwary intervenor may well experience an unexpected shock

- Failure to function as expected is to be expected. It is a perfectly general feature of systems not to do what we expected them to do.

- "Anergy" is the unit of human effort required to bring the universe into line with human desires, needs, or pleasures. The total amount of anergy in the universe is constant. While a new system may reduce the problem it set out to solve, it also produces new problems.

- Once a system is in place, it not only persists but grows and encroaches

- Reality is more complex than it seems and complex systems always exhibit unexpected behavior. A system is not a machine. Its behavior cannot be predicted even if you know its mechanism

- Systems tend to oppose their own proper functions. There is always positive and negative feedback and oscillations in between. The pendulum swings

- Systems tend to malfunction conspicuously just after their greatest triumph. The ghost of the old system continues to haunt the new

- People in systems do not do what the system says they are doing. In the same vein, a larger system does not perform the same function as the smaller system. The larger the system, the less the variety in the product. The name is most emphatically not the thing

- To those within a system, the outside reality tends to pale and disappear. They experience sensory deprivation (a lack of contrasting experiences) and an altered mental state. A selective process occurs where the system attracts and keeps those people whose attributes suit them to life in that system: systems attract systems people

- The bigger the system, the narrower and more specialized the interface with individuals (SS number rather than dealing with a human)

- Systems delusions are the delusion systems that are almost universal in our modern world

- Designers of systems tend to design ways for themselves to bypass the system. If a system can be exploited, it will be, and any system can be exploited

- If a big system doesn’t work, it won’t work. Pushing systems doesn’t help and adding manpower to a late project typically doesn’t help. However, some complex systems do work and these should be left alone. Don’t change anything. A complex system that works is invariably found to have evolved from a simple system that worked. A complex system designed from scratch never works and cannot be made to work. You have to start over, beginning with a working simple system. Few areas offer greater potential reward than understanding the transition from working simple system to working complex system

- In complex systems, malfunction and even total nonfunction may not be detectable for long periods, if ever. Large complex systems tend to be beyond human capacity to evaluate. But whatever the system has done before, you can be sure it will do again

- The system is its own best explanation - it is a law unto itself. Systems develop internal goals the instant they come into being and these goals come first. Systems don't work for you or me. They work for their own goals and behave as if they have a will to live

- Most large systems are operating in failure mode most of the time. So, it is important to understand how a system fails, how it works when its components aren't working well, and how well it works in failure mode. The failure modes typically cannot be determined ahead of time and the crucial variables tend to be discovered by accident

- There will always be bugs and we can never be sure if they're local or not. Cherish these bugs, study them for they significantly advance you towards the path of avoiding failure. Life isn't a matter of just correcting occasional errors, bugs, or glitches. Error-correction is what we are doing every instant of our lives

- Form may follow function but don't count on it. As systems grow in size and complexity, they tend to lose basic functions (supertankers can't dock)

- Colossal systems cause colossal errors and these errors tend to escape notice. If it is grandiose enough, it may not even be comprehended as an error (50,000 Americans die each year in car accidents but it is not seen as a flaw of the transportation system, merely a fact of life.) Total Systems tend to run away and go out of control

- In setting up a new system, tread softly. You may be disturbing another system that is actually working

- It is impossible not to communicate - but it isn't always what you want. The meaning of a communication is the behavior that results

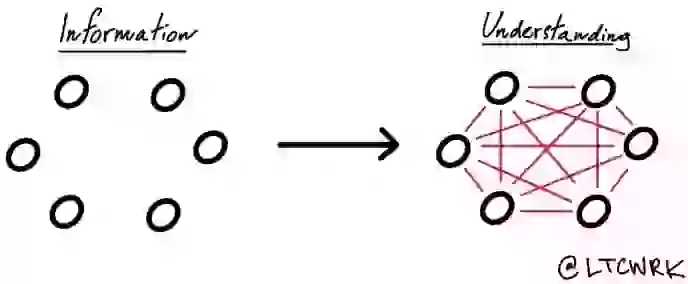

- Knowledge is useful in the service of an appropriate model of the universe, and not otherwise. Information decays and the most urgently needed information decays fastest. However, one system's garbage is another system's precious raw material. The information you have is not the information you want. The information you want is not the information you need. The information you need is not the information you can obtain.

- In a closed system, information tends to decrease and hallucination tends to increase

- What Can Be Done

- Inevitability-of-Reality Fallacy - things have to be the way they are and not otherwise because that's just the way they are. The person or system who has a problem and doesn't realize it has two problems, the problem itself and the meta-problem of unawareness

- Problem avoidance is the strategy of avoiding head-on encounters with a stubborn problem that does not offer a good point d'appui, or toe hold. It is the most under-rated of all methods of dealing with problems. Little wonder, for its practitioners are not to be found struggling valiantly against staggering odds, nor are they to be seen fighting bloody but unbowed, nor are they observed undergoing glorious martyrdom. They are simply somewhere else, successfully doing something else. Like Lao Tzu himself, they have slipped quietly away into a happy life of satisfying obscurity. The opposite of passivity is initiative, or responsibility - not energetic futility. Choose your systems with care. Destiny is largely a set of unquestioned assumptions

- Creative Tack - if something isn't working, don't keep doing it. Do something else instead - do almost anything else. Search for problems that can be neatly and elegantly solved with the resources (or systems) at hand. The formula for success is not commitment to the system but commitment to Systemantics

- The very first principle of systems-design is a negative one: do without a new system if you can. Two corollaries: do it with an existing system if you can; do it with a small system if you can.

- Almost anything is easier to get into than out of. Taking it down is often more tedious than setting it up

- Systems run best when designed to run downhill. In essence, avoid uphill configurations, go with the flow. In human terms, this means working with human tendencies rather than against them. Loose systems last longer and function better. If the system is built too tight it will seize up, peter out, or fly apart. Looseness looks like simplicity of structure, looseness in everyday functioning; "inefficiency" in the efficiency-expert's sense of the term; and a strong alignment with basic primate motivations

- Slack in the system, redundancy, and "inefficiency" don't cost; they pay

- Bad design can rarely be overcome by more design, whether bad or good. In other words, plan to scrap the first system when it doesn't work; you will anyway

- Calling it "feedback" doesn't mean that it has actually fed back. It hasn't fed back until the system changes course. The reality that is presented to the system must also make sense if the system is to make an appropriate response. The sensory input must be organized into a model of the universe that by its very shape suggests the appropriate response. Too much feedback can overwhelm the response channels, leading to paralysis and inaction. The point of decision will be delayed indefinitely, and no action will be taken. Togetherness is great, but don't knock get-away-ness. Systems which don't know how much feedback there will be or which sources of feedback are critical, will begin to fear feedback and regard it as hostile, and even dangerous to the system. The system which ignores feedback has already begun the process of terminal instability. This system will be shaken to pieces by repeated violent contact with the environment it is trying to ignore. To try to force the environment to adjust to the system, rather than vice versa, is truly to get the cart before the horse

- What the pupil must learn, if he learns anything, is that the world will do most of the work for you, provided you cooperate with it by identifying how it really works and identifying with those realities. - Joseph Tussman

- Nature is only wise when feedbacks are rapid. Like nature, systems cannot be wise when feedbacks are unduly delayed. Feedback is likely to cause trouble if it is either too prompt or too slow. However, feedback is always a picture of the past. The future is no more predictable now than it was in the past, but you can at least take note of trends. The future is partly determined by what we do now and it's at this point that genuine leadership becomes relevant. The leader sees what his system can become. He has that image in mind. It's not just a matter of data, it's a matter of the dream. A leader is one who understands that our systems are only bounded by what we can dream. Not just ourselves, but our systems also, are such stuff as dreams are made on. It behooves us to look to the quality of our dreams

- Catalytic managership is based on the premise that trying to make something happen is too ambitious and usually fails, resulting in a great deal of wasted effort and lowered morale. On the other hand, it is sometimes possible to remove obstacles in the way of something happening. A great deal may then occur with little effort on the part of the manager, who nevertheless (and rightly) gets a large part of the credit. Catalytic managership will only work if the system is so designed that something can actually happen - a condition that commonly is not met. Catalytic managership has been practiced by leaders of genius throughout recorded history. Gandhi is reported to have said, "There go my people. I must find out where they are going, so I can lead them." Choosing the correct system is crucial for success in catalytic managership. Our task, correctly understood, is to find out which tasks our system performs well and use it for those. Utilize the principle of utilization

- The system itself does not solve problems. The system represents someone's solution to a problem. The problem is a problem precisely because it is incorrectly conceptualized in the first place, and a large system for studying and attacking the problem merely locks in the erroneous conceptualization into the minds of everyone concerned. What is required is not a large system, but a different approach. Solutions usually come from people who see in the problem only an interesting puzzle, and whose qualifications would never satisfy a select committee. Great advances do not come out of systems designed to produce great advances. Major advances take place by fits and starts

- Most innovations and advancements come from outside the field

- It is generally easier to aim at changing one or a few things at a time and then work out the unexpected effects than to go to the opposite extreme. Attempting to correct everything in one grand design is appropriately designated as grandiosity. In dealing with large systems, the striving for perfection is a serious imperfection. Striving for perfection produces a kind of tunnel-vision resembling a hypnotic state. Absorbed in the pursuit of perfecting the system at hand, the striver has no energy or attention left over for considering other, possibly better, ways of doing the whole thing

- Nipping disasters in the bud, limiting their effects, or, better yet, preventing them, is the mark of a truly competent manager. Imagination in disaster is required - the ability to visualize the many routes of potential failure and to plug them in advance, without being paralyzed by the multiple scenarios of disaster thus conjured up. In order to succeed, it is necessary to know how to avoid the most likely ways to fail. Success requires avoiding many separate possible causes of failure.

- In order to be effective, an intervention must introduce a change at the correct logical level. If your problem seems unsolvable, consider that you may have a meta-problem

- Control is exercised by the element with the greatest variety of behavioral responses - always act so as to increase your options. However, we can never know all the potential behaviors of the system

- The observer effect - the system is altered by the probe used to test it. However, there can be no system without its observer and no observation without its effects

- Look for the self-referential point - that's where the problem is likely to be (nuclear armament leading to mutually assured destruction)

- Be wary of the positive feedback trap. If things seem to be getting worse even faster than usual, consider that the remedy may be at fault. Escalating the wrong solution does not improve the outcome. The author proposes a new word, "Escalusion" (or "delusion-squared", D²), to represent escalated delusion

- If things are acting very strangely, consider that you may be in a feedback situation. Alternatively, when problems don't yield to commonsense solutions, look for the "thermostat" (the trigger creating the feedback)

- The remedy must strike deeply at the roots of the system itself to produce any significant effect

- Reframing is an intellectual tool which offers hope of providing some degree of active mastery in systems. A successful reframing of the problem has the power to invalidate such intractable labels as "crime", "criminal", or "oppressor" and render them as obsolete and irrelevant as "ether" in modern physics. When reframing is complete, the problem is not "solved" - it doesn't even exist anymore. There is no longer any problem to discuss, let alone a solution. If you can't change the system, change the frame - it comes to the same thing. The proposed reframing must be genuinely beneficial to all parties or it will produce a destructive kickback. A purported reframing which is in reality an attempt to exploit will inevitably be recognized as such sooner or later. The system will go into defense mode and all future attempts to communicate will be viewed as attempts to exploit, even when not so motivated

- Everything correlates - any given element of one system is simultaneously an element in an infinity of other systems. The fact of linkage provides a unique, subtle, and powerful approach to solving otherwise intractable problems. As a component of System A, element x is perhaps inaccessible. But as a component of System B, C, or D... it can perhaps be affected in the desired direction by intervening in System B, C, D...

- In order to remain unchanged, the system must change. Specifically, the changes that must occur are changes in the patterns of changing (or strategies) previously employed to prevent drastic internal change. The capacity to change in such a way as to remain stable when the ground rules change is a higher-order level of stability, which fully deserves its designation as Ultra-stability
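
As a rough illustration of the feedback notes above (feedback is always a picture of the past, and trouble follows when it arrives too late), here is a minimal Python sketch. It is not from the book: the function names `simulate` and `max_overshoot`, the gain of 0.5, and the thermostat-style target of 20 are all assumptions chosen for illustration. A controller nudges a value toward a target, but it only sees a reading that is `delay` steps old.

```python
def simulate(delay, gain=0.5, steps=60, target=20.0, start=10.0):
    """Nudge a value toward `target` using a reading that is `delay` steps old.

    All parameters are illustrative assumptions, not values from the book.
    """
    history = [start]
    for _ in range(steps):
        # The controller never sees the present, only a picture of the past.
        observed = history[max(0, len(history) - 1 - delay)]
        history.append(history[-1] + gain * (target - observed))
    return history


def max_overshoot(history, target=20.0):
    """Return how far the value ever rose above the target (0.0 if never)."""
    return max(0.0, max(history) - target)


if __name__ == "__main__":
    for delay in (0, 3):
        run = simulate(delay)
        print(f"delay={delay}: overshoot={max_overshoot(run):.2f}, "
              f"lowest value={min(run):.2f}")
```

Under these assumed settings, the run with no delay settles on the target without ever passing it, while the same controller with a three-step delay swings well past the target in both directions: a small version of the pendulum mentioned earlier in the notes.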

What I got out of it

- A fun and sarcastic read about systems, their general behavior, how difficult they are to change, and much more.